The Problem: 75% of Companies Ban ChatGPT

Despite initial enthusiasm for generative AI tools like ChatGPT, companies are restricting their use due to growing data privacy and cybersecurity concerns. The primary worry is that these AI tools store and learn from user data, potentially leading to unintended data breaches. Although OpenAI, the developer of ChatGPT, offers an opt-out option for training with user data, the handling of data within the system remains unclear. Furthermore, clear legal regulations regarding responsibility for AI-caused data breaches are lacking. Consequently, companies are hesitant and waiting for the technology and its regulation to evolve.

Microsoft has warned its employees against sharing sensitive data with ChatGPT, the chatbot developed by OpenAI. Concerns arise that confidential information could be inadvertently shared with the chatbot, potentially leading to its dissemination to other users. Microsoft’s cautious approach is notable considering the company’s partnership and investment in OpenAI. Amazon has also issued a similar warning to its employees. Responsibility for protecting confidential data remains unclear, and clearer guidelines and regulations for such situations are needed. OpenAI’s terms of service permit the company to use all inputs and outputs generated by users and ChatGPT, though personal data is intended to be removed. However, concerns persist regarding the potential for extracting private company data through cleverly crafted input prompts.

The Solution: Self-Hosted AI Models

One solution to the data security and privacy concerns surrounding AI models is to host the models on your own servers. Prompts and documents then stay inside your own infrastructure, so the company retains control over its data and decides who can access it. Self-hosting lets you use AI technology without sending confidential information to a third-party service.

We will use Ollama and Chatbox. Ollama is a streamlined tool for running open-source large language models (LLMs) like Mistral and Llama 2 locally. Chatbox is an application that visualizes API calls to various models.

Does this sound too strenuous? Or would a hosted API be better? Use document-chat.com or schedule a free introductory call with us .

Step 1: Ollama Download and Installation

You’ll need a server or computer with a GPU. For this guide, that means an NVIDIA GPU with at least 8 GB of VRAM or an Apple Mac with an M-series chip. Find the exact requirements in this post: What are the advantages of self-hosted AI models?

TLDR version:

- Download and install Ollama: https://ollama.com/download

- Run a model: ollama run llama2

- Ollama is then accessible at localhost:11434
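To confirm that the server is actually listening after installation, a small check like the following can help. It is a minimal sketch that assumes the default address localhost:11434 mentioned above; the function name ollama_is_running is purely illustrative and not part of Ollama.

```python
import urllib.error
import urllib.request

# Default address of the local Ollama server, as stated in the text above.
OLLAMA_URL = "http://localhost:11434"


def ollama_is_running(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> bool:
    """Return True if an HTTP server answers with status 200 at base_url."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, or an HTTP error status.
        return False
```

Calling ollama_is_running() returns False when nothing is listening, which usually means Ollama has not been started yet.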

What is Ollama?

Ollama is a streamlined tool for running open-source large language models (LLMs) like Mistral and Llama 2 locally. Ollama bundles model weights, configuration, and data into a single package defined by a Modelfile.

Ollama supports various LLMs, including Llama 2, uncensored Llama, Code Llama, Falcon, Mistral, Vicuna, WizardCoder, and Wizard uncensored.

The five most popular models on Ollama are:

- llama2: The most popular model for general use.

- mistral: Mistral AI’s 7B model, updated to version 0.2.

- codellama: A large language model that utilizes text inputs to generate and discuss code.

- dolphin-mixtral: An uncensored, fine-tuned model based on the Mixtral MoE, particularly suitable for coding tasks.

- mistral-openorca: Mistral 7b, fine-tuned with the OpenOrca dataset.

Ollama also supports custom models. You create one from a Modelfile with “ollama create”; Ollama then processes the layers, writes the weights, and prints a success message.
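As an illustration of what such a model file can contain, the sketch below renders a small Modelfile that customizes an existing model with a system prompt and a temperature setting. The helper render_modelfile and the example values are assumptions made for this illustration; only the FROM, PARAMETER, and SYSTEM instructions come from Ollama's Modelfile format.

```python
def render_modelfile(base_model: str, system_prompt: str, temperature: float = 0.7) -> str:
    """Build the text of a Modelfile that customizes an existing model."""
    lines = [
        # FROM names the base model the custom model is built on.
        f"FROM {base_model}",
        # PARAMETER sets a runtime option; a lower temperature gives steadier answers.
        f"PARAMETER temperature {temperature}",
        # SYSTEM sets the system prompt that is sent with every conversation.
        f'SYSTEM """{system_prompt}"""',
    ]
    return "\n".join(lines) + "\n"
```

Saving the returned text as a file named Modelfile and running “ollama create my-assistant -f Modelfile” (my-assistant is a placeholder name) would register the custom model, after which “ollama run my-assistant” starts it.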

Other available models include:

- Llama2: Meta’s foundational “open source” model.

- Mistral/Mixtral: Mistral AI’s 7B model and Mixtral, its mixture-of-experts (MoE) model.

- LLaVA: A multimodal model (Large Language and Vision Assistant) capable of interpreting visual inputs.

- CodeLlama: A model trained on code and natural language in English.

- DeepSeek Coder: Trained on 87% code and 13% natural language in English.

- Meditron: An open-source medical model adapted from Llama 2 to the medical domain.

Installation and Setup of Ollama

- Download Ollama from the official website.

- Installation is straightforward, similar to other software. For macOS and Linux, use:

curl https://ollama.ai/install.sh | sh

- Ollama creates an API for interacting with the model locally.

- Ollama is compatible with macOS and Linux; Windows support is coming soon.

Running Models with Ollama

Running models is simple. Download models and execute them using the “run” command in the terminal. If a model isn’t installed, Ollama downloads it automatically. For example, run CodeLlama with “ollama run codellama”.

Models are saved in the ~/.ollama/models directory. When downloading a model via “ollama pull”, it’s stored in ~/.ollama/models/manifests/registry.ollama.ai/library/
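Because model files are large, it can be useful to check how much disk space the model directory occupies. The following is a minimal sketch that assumes the default location ~/.ollama/models named above; the function name directory_size_bytes is illustrative, and the actual location may differ depending on how Ollama was installed.

```python
from pathlib import Path

# Default model directory as described in the text; an assumption for other setups.
DEFAULT_MODEL_DIR = Path.home() / ".ollama" / "models"


def directory_size_bytes(root: Path = DEFAULT_MODEL_DIR) -> int:
    """Sum the sizes of all regular files below root (0 if root does not exist)."""
    if not root.exists():
        return 0
    return sum(path.stat().st_size for path in root.rglob("*") if path.is_file())
```

Dividing the result by 1024 ** 3 gives the size in GiB, for example directory_size_bytes() / 1024 ** 3.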

Ollama also offers REST API access for real-time interactions.
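A request to the REST API can be sketched with Python's standard library alone. The example assumes the server runs at localhost:11434 and that the llama2 model has already been downloaded; the function names are illustrative. It uses the /api/generate endpoint with streaming turned off, so the full answer arrives in a single JSON object.

```python
import json
import urllib.request


def build_generate_request(
    prompt: str,
    model: str = "llama2",
    base_url: str = "http://localhost:11434",
) -> urllib.request.Request:
    """Prepare a POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama2") -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_generate_request(prompt, model)
    # Generation can take a while on modest hardware, hence the long timeout.
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["response"]
```

With the server running, generate("Why is the sky blue?") returns the model's answer as a string. Leaving "stream" at its default would instead produce a sequence of JSON lines, which suits live output but needs a little more parsing.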

Additional Features

Ollama supports importing GGUF and GGML model files, allowing for model creation, iteration, and sharing.
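Importing a local GGUF file comes down to a model file whose FROM line points at that file, followed by “ollama create”. The sketch below only prepares these two pieces; the helper names, the file name, and the model name in the usage note are placeholders, not anything prescribed by Ollama.

```python
from pathlib import Path


def write_gguf_modelfile(gguf_path: Path, target_dir: Path) -> Path:
    """Write a Modelfile whose FROM line points at a local GGUF file."""
    modelfile = target_dir / "Modelfile"
    modelfile.write_text(f"FROM {gguf_path}\n", encoding="utf-8")
    return modelfile


def create_command(model_name: str, modelfile: Path) -> list[str]:
    """Return the 'ollama create' command line that imports the model."""
    return ["ollama", "create", model_name, "-f", str(modelfile)]
```

Passing the returned list to subprocess.run(..., check=True) would perform the import, for example with create_command("my-model", write_gguf_modelfile(Path("./my-model.gguf"), Path("."))).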

Available Models

A list of available models can be found on the Ollama Model Library page.

In summary, Ollama provides a user-friendly platform for running LLMs locally. It’s a valuable resource for developers, researchers, and AI enthusiasts.

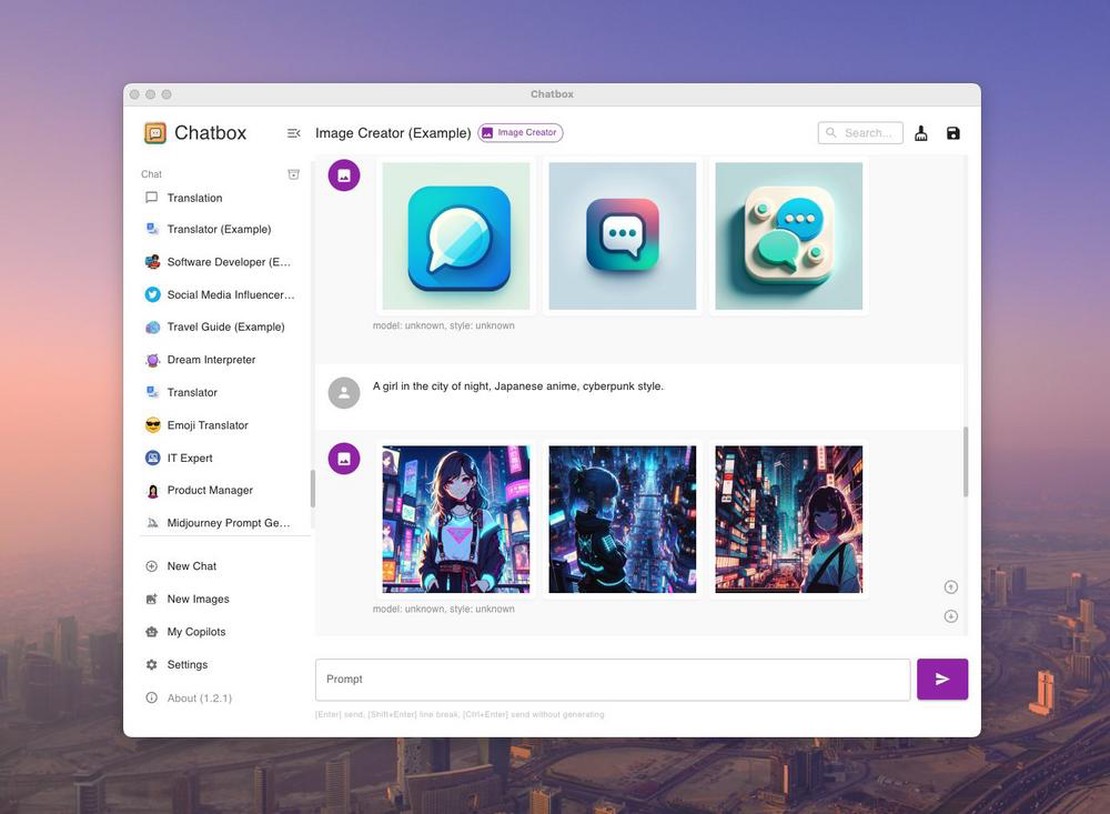

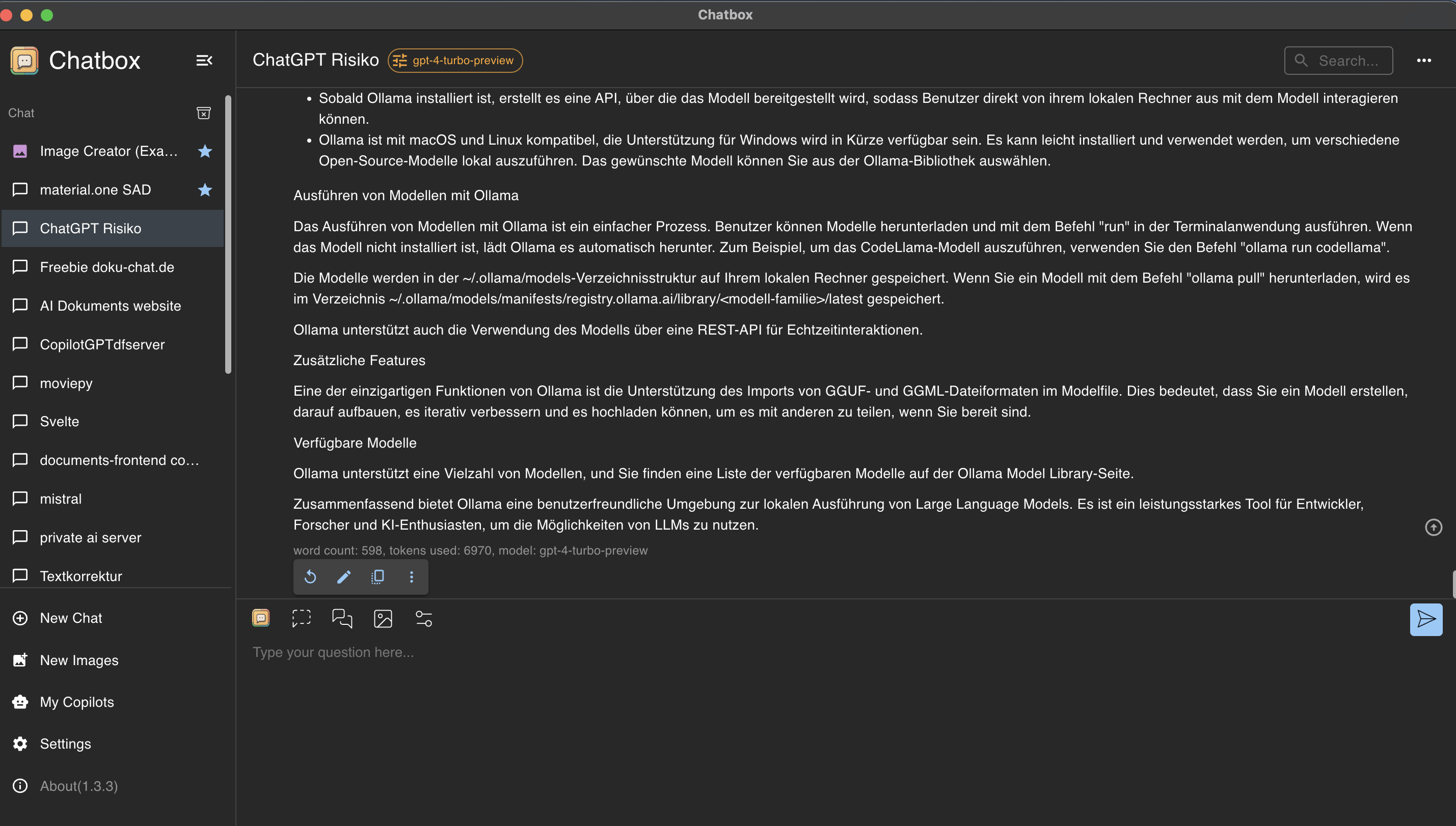

Step 2: Controlling and Utilizing Ollama with the Chatbox GUI

Chatbox is an application that visualizes API calls to various models.

Chatbox Installation

Visit https://chatboxai.app and install the application on your computer.

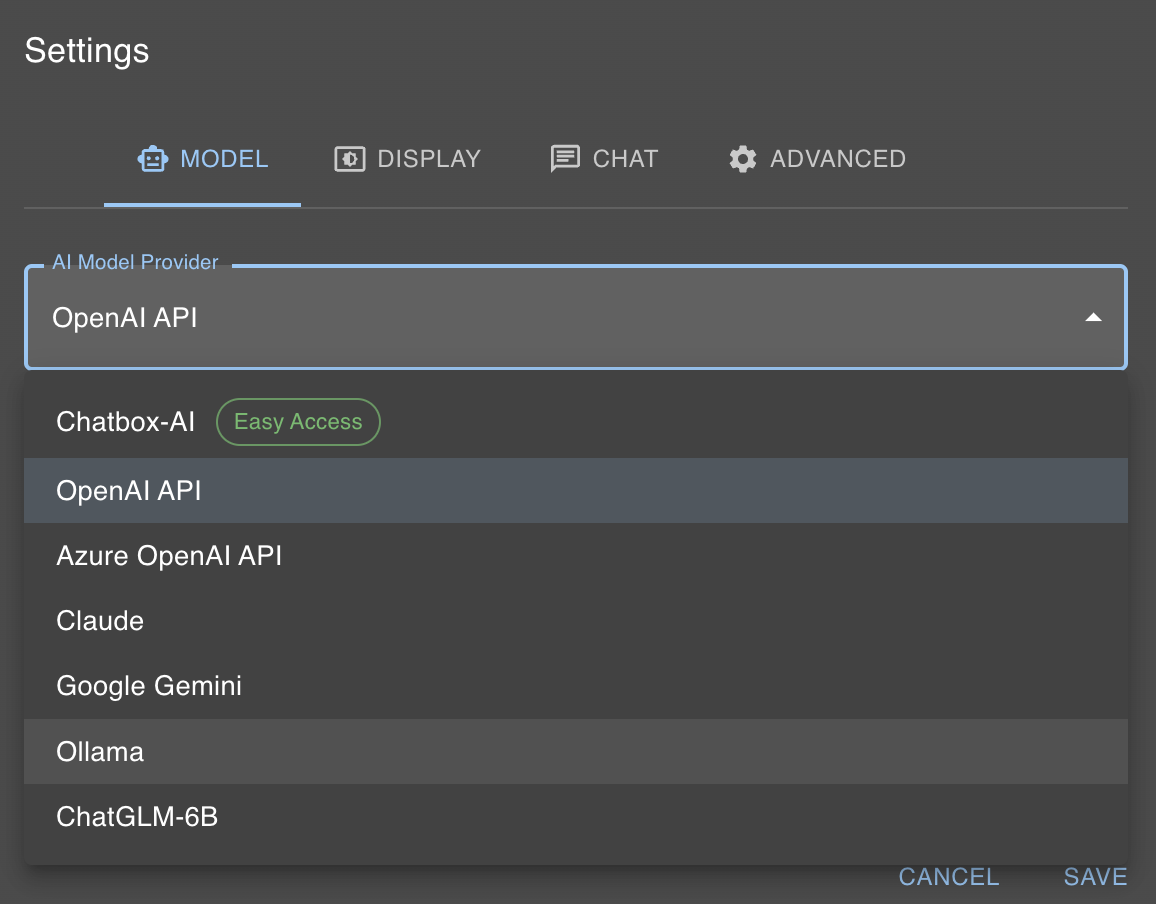

Connecting Chatbox and Ollama

Our local AI model is accessible at localhost:11434. Chatbox allows easy interaction with Ollama. Click “Settings” and select Ollama as the “AI Model Provider”.

Then, select the desired model (e.g., llama2) from the model dialog.
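To get a feel for what a chat client does with this connection, the following sketch sends a conversation to Ollama's /api/chat endpoint. How Chatbox talks to Ollama internally is not described here, so treat this as an assumption about the general pattern rather than a description of Chatbox; the function names are illustrative.

```python
import json
import urllib.request


def build_chat_payload(history: list[dict], user_message: str, model: str = "llama2") -> dict:
    """Append the new user message to the history and build the request body."""
    messages = list(history) + [{"role": "user", "content": user_message}]
    return {"model": model, "messages": messages, "stream": False}


def send_chat(payload: dict, base_url: str = "http://localhost:11434") -> dict:
    """POST the payload to /api/chat and return the assistant's message."""
    request = urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.loads(response.read().decode("utf-8"))
    # The reply has the same shape as the history entries: role and content.
    return body["message"]
```

Appending each returned message to the history before the next call keeps the conversation context, which is what makes follow-up questions work.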
