Introduction

Large language models like GPT (Generative Pre-trained Transformer) have become powerful tools for a wide range of applications in the artificial intelligence (AI) world. However, companies should be cautious about relying too heavily on these models. While they offer accuracy and scalability, they also have limitations. They may produce biased or incorrect responses due to limitations in their training data, and they are not capable of fully understanding human context and nuances.

This blog post will explore the benefits of self-hosted AI models. Self-hosted models provide businesses with greater control over their AI infrastructure, leading to improved performance, adaptability, and data privacy. By hosting the model on their own servers, companies can ensure that their proprietary data remains under their control and address concerns about data security and privacy.

However, self-hosting also presents challenges. It requires significant investment in hardware and expertise to manage and maintain the infrastructure. Furthermore, the responsibility for updating the model to incorporate new information and for protecting against security vulnerabilities rests entirely with the company. Given the rapid advancements in AI and cybersecurity, this can be a demanding task.

Overall, large language models represent a significant advancement in AI, offering companies the potential for innovation and increased efficiency. But their limitations and the complexity of fine-tuning and self-hosting require a cautious approach. Companies need to weigh the advantages of these powerful tools against the risks of over-reliance, inaccuracies, and ethical concerns. By doing so, they can leverage the potential of self-hosted AI models while mitigating the risks associated with these groundbreaking technologies.

Why Not Simply Use a Cloud Model?

There are several reasons why businesses and individuals might choose to self-host AI models. One of the primary reasons is data security. When using models from providers like OpenAI, there’s a chance that the input data might be used as training material. By self-hosting, companies can ensure their sensitive data remains under their control and is not used for training purposes.

Tip: To avoid your data being used by American AI providers like OpenAI, consider operating a “self-hosted” AI model. This requires a server with an Nvidia GPU and the appropriate “torch” setup. For DIY, the open-source project Ollama (https://ollama.com ) is recommended. Alternatively, consider a German KI provider such as document-chat.com (https://document-chat.com ) or DataFortress Cloud (https://datafortress.cloud ). document-chat.com also offers project-based organization, useful sharing/team features, and more!

Another important aspect is cost savings. Self-hosted models are typically free or require minimal licensing fees compared to the high costs associated with using third-party models. This makes self-hosted models a financially attractive option for businesses of all sizes.

Privacy protection is another crucial factor. By self-hosting, companies retain control over their data and can ensure it is not shared with third parties. This is particularly important in industries with strict data privacy regulations.

In summary, self-hosting AI models offers advantages such as improved data security, cost savings, and privacy protection. Companies can maintain full control over their AI infrastructure while benefiting from the advantages of this powerful technology.

What Do I Need to Operate a Self-Hosted AI Model?

To operate a self-hosted AI model, you require specific resources. A crucial component is an Nvidia GPU server, as GPUs provide high processing power for training and running AI models. However, it’s important to note that Nvidia GPU servers can be quite expensive, representing a considerable investment.

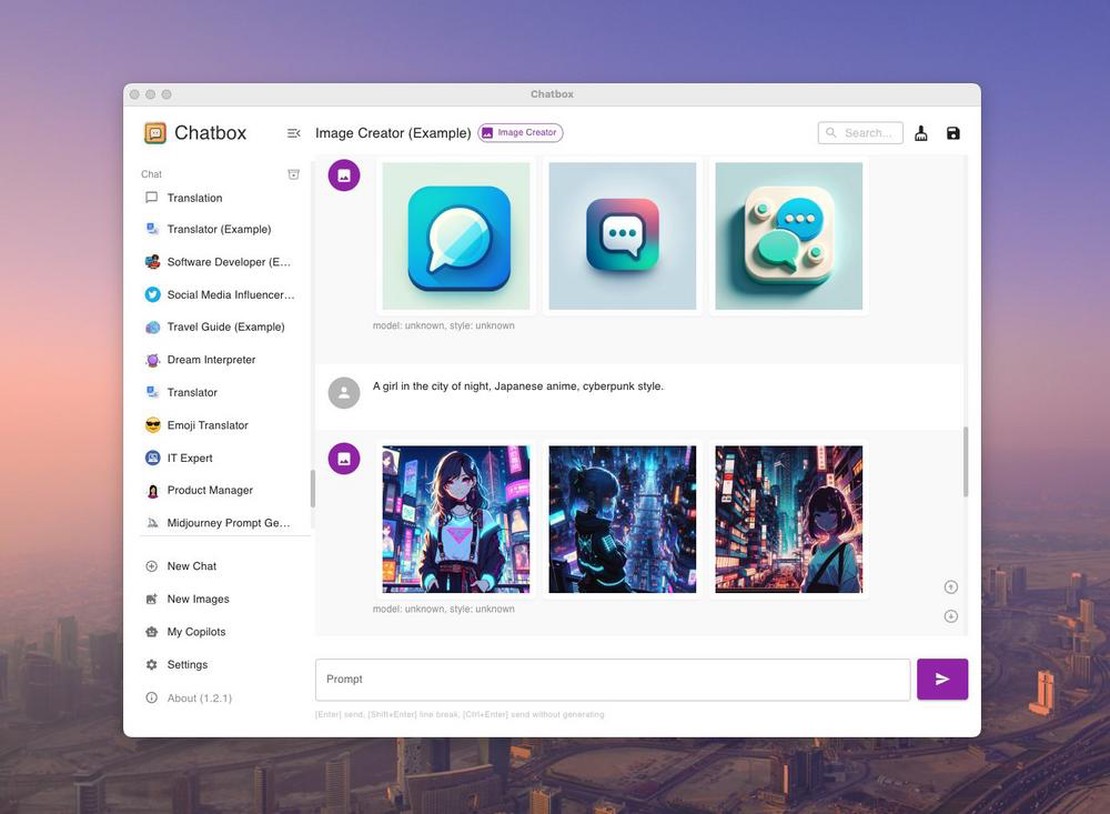

Fortunately, there are open-source projects, such as Ollama (https://github.com/ollama/ollama) , which simplify model hosting. Ollama offers an easy and user-friendly way to host models yourself. It provides tools and resources to simplify and automate the self-hosting process.

With Ollama and a suitable Nvidia GPU server, you can run your own AI model and benefit from the advantages of self-hosting without the full financial burden of a dedicated server.

It’s crucial to acknowledge that operating a self-hosted AI model still requires technical expertise. Having knowledge of machine learning and server administration, or consulting experts, can be helpful in setting up and maintaining the system.

With the right resources and tools like Ollama, you can run your own AI model and leverage the benefits of self-hosting to maintain control, safeguard data, and save costs.

And What Kind of Server Do I Need for the Models?

First, assess your requirements. “Coding assistants” like GitHub Copilot require less resource and can operate with a GPU having ~8GB VRAM. For larger models behaving similarly to ChatGPT, at least 16 GB VRAM is needed. If you anticipate performance on par with GPT-4, you’ll need more substantial resources, ideally a GPU cluster with at least 64GB VRAM.

A small overview of models and their memory requirements is presented below:

| Model | Parameters | Required VRAM |

|---|